Some Thoughts on Language

Functionalism, Structuralism, and the "Language is Everything" Hypothesis

I've discussed my take on the relationship between Functionalist and Structuralist theories here.

For instance, I think Structuralism might provide explanatory power for Functionalist theories that have sometimes been considered to miss the mark (due to their inability to address those kinds of criticisms).

On the idea that some conception of language may be the best way to build a monistic theory:

- Patterns as understood through the lens of Structuralism, and Languages as understood through that conception of language, might be synonymous or nearly so.

- I still think that is a fruitful perspective to take with respect to those philosophical views, since a linguistic (better yet, meta-linguistic) starting point might dispel several unhelpful analogies, metaphors, and ways of thinking that presently permeate philosophy.

One might take the view that language appears to require something like intent or intentionality, but I think a fairly standard Model-Theoretic view reveals that intent and meaning are defined by specific mappings to certain linguistic structures. Language need not be symbolic, it need not be spoken, and it need not have an Observer (whether in the sense of the Copenhagen Interpretation or in the Berkeleyan sense in which semantic meaning is fixed by our being within the Mind of God).
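A minimal sketch of what "meaning as a mapping" looks like in the standard model-theoretic setup: a structure is a domain plus an interpretation that assigns constant symbols to objects and predicate symbols to relations, and an atomic sentence is true just in case the assigned objects stand in the assigned relation. The names `Structure`, `satisfies`, and the toy "rock" domain are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Set, Tuple

# Hypothetical toy structure: a domain plus an interpretation function.
@dataclass
class Structure:
    domain: FrozenSet[str]
    constants: Dict[str, str]                    # constant symbol -> object
    predicates: Dict[str, Set[Tuple[str, ...]]]  # predicate symbol -> relation

def satisfies(m: Structure, predicate: str, *args: str) -> bool:
    """Truth of the atomic sentence predicate(args...) in structure m.

    Meaning is fixed entirely by the mappings stored in m; no speaker,
    intention, or observer enters the evaluation.
    """
    objects = tuple(m.constants[a] for a in args)
    return objects in m.predicates[predicate]

# Two rocks, one heavier than the other; no agent is involved anywhere.
rock_world = Structure(
    domain=frozenset({"r1", "r2"}),
    constants={"a": "r1", "b": "r2"},
    predicates={"Heavier": {("r1", "r2")}},
)

print(satisfies(rock_world, "Heavier", "a", "b"))  # True
print(satisfies(rock_world, "Heavier", "b", "a"))  # False
```

Nothing in `satisfies` consults anyone's intentions: change the mappings in `rock_world` and the meanings change with them.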

Rules also need not be deliberate norms set by a community of thinking agents/beings. Some boundaries or restrictions simply arise in nature.

Strictly speaking, I believe that Structuralist patterns (say, the patterns uncovered in mathematics) are revealed within and through language (are linguistic), but that language, even (and especially) in the sense above, exceeds that Structuralist conception. One must obviously have non-artificial language as a starting point to discover or uncover mathematics as we have (indeed, we came to discover math, geometry, advanced numbers like 0, etc. only after achieving writing). So, given that distinction (thin though it might be), I don't think the view simply collapses into a form of Structuralism. It's a separate but related view.

Transitive Communication and Other Language Games

There are many language games that people can play (even if they are admonished not to). Consider Transitive Communication:

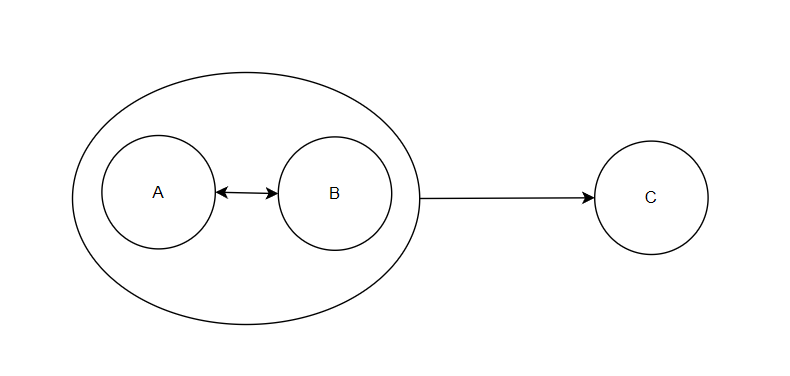

- A group of Engineers discuss a matter and rule on an outcome, then elevate only the outcome of the discussion to a higher authority, like a Team Lead, to approve and implement.

- Two parties communicate directly with each other in order to communicate indirectly with a third party.

- Interleaving the communication of two contexts into one, so as to blend them and make ambiguous the contextual factors that locate each.

- Using the Media (the institution itself) to communicate indirectly between elites (in the same way that one might use a cell phone).

- Cell phones themselves, since they serialize human speech into bytes and then back again. The phones and the entire phone-provider stack are intermediaries.

- One might, for instance, use homophones with one clear semantic intent with respect to a person A but a different clear semantic intent with respect to a neighboring B (say, disposed toward authenticity and satire, respectively).
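A toy sketch of transitive communication, treating each intermediary as a function that the message must pass through before reaching its final recipient. The relays `phone_link` and `outcome_only`, and the sample discussion, are hypothetical illustrations of two of the cases above (the phone stack's round trip through bytes, and engineers escalating only their ruling).

```python
from typing import Callable, List

Relay = Callable[[str], str]  # an intermediary that may transform a message

def phone_link(message: str) -> str:
    # Serialize to bytes and back again, as a phone stack does with speech.
    return message.encode("utf-8").decode("utf-8")

def outcome_only(message: str) -> str:
    # Forward only the ruling (assumed to be the last line) of a discussion.
    return message.strip().splitlines()[-1]

def transmit(message: str, chain: List[Relay]) -> str:
    # The final recipient sees only what survives every intermediary, in order.
    for relay in chain:
        message = relay(message)
    return message

discussion = "Option A is slower.\nOption B is riskier.\nRuling: adopt Option A."
print(transmit(discussion, [outcome_only, phone_link]))  # Ruling: adopt Option A.
```

Modeling intermediaries as plain functions makes the chain composable: what the Team Lead receives is the composition of every relay, which is one way to read "transitive" here.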

These basic kinds of communication are frequently observed in human interactions but receive no mention (AFAIK) in the existing Philosophy of Language.

Chiastic Meaning

I was reading the interesting Ph.D. thesis "The Theology of Hathor of Dendera: Aural and Visual Scribal Techniques in the Per-Wer Sanctuary," which provides a thorough look at Egyptian writing systems (particularly those inscribed over time on the walls of the Temple of Hathor).

Apparently, Egyptian writing systems both evolved tremendously over time and exhibit certain essential features that we don't find in many other language systems, including chiasmus, a concept that is, has been, and may continue to be of interest to those working in Ring and Group Theory.
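A chiasmus pairs elements from the outside in (A B C B' A'). As a minimal sketch, assuming each element has already been tagged with a theme label (the tagging itself, where the real scholarly work lies, is not modeled), checking for chiastic structure reduces to checking that the labels are unchanged by reversal. Reversal is an involution (applying it twice returns the original), which hints at why group-theoretic vocabulary fits. The function names and labels are hypothetical.

```python
from typing import List, Sequence, Tuple

def is_chiastic(themes: Sequence[str]) -> bool:
    """True if the theme labels mirror each other: A B ... B A.

    Equivalently, the sequence is unchanged by reversal; an odd-length
    sequence has an unpaired center element.
    """
    return all(themes[i] == themes[-1 - i] for i in range(len(themes) // 2))

def chiastic_pairs(themes: Sequence[str]) -> List[Tuple[int, int]]:
    # Index pairs a chiastic reading matches up, from the outside in.
    n = len(themes)
    return [(i, n - 1 - i) for i in range(n // 2)]

ring = ["A", "B", "C", "B", "A"]          # hypothetical theme labels
print(is_chiastic(ring))                  # True
print(chiastic_pairs(ring))               # [(0, 4), (1, 3)]
print(is_chiastic(["A", "B", "A", "B"]))  # False
```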

Today's semantic notions are grounded in simple Model-Theoretic and Predicate Algebraic notions. Chiasmus, by contrast, is from the get-go most akin to sophisticated encryption systems and to Markov Chain-inspired Semantics (like Kit Fine's Supervaluationism).

This is perhaps a better starting point for viewing more radical (although chronologically more primary) ways to break apart human writing and language systems and to re-engineer how we think, represent, encode, and communicate.

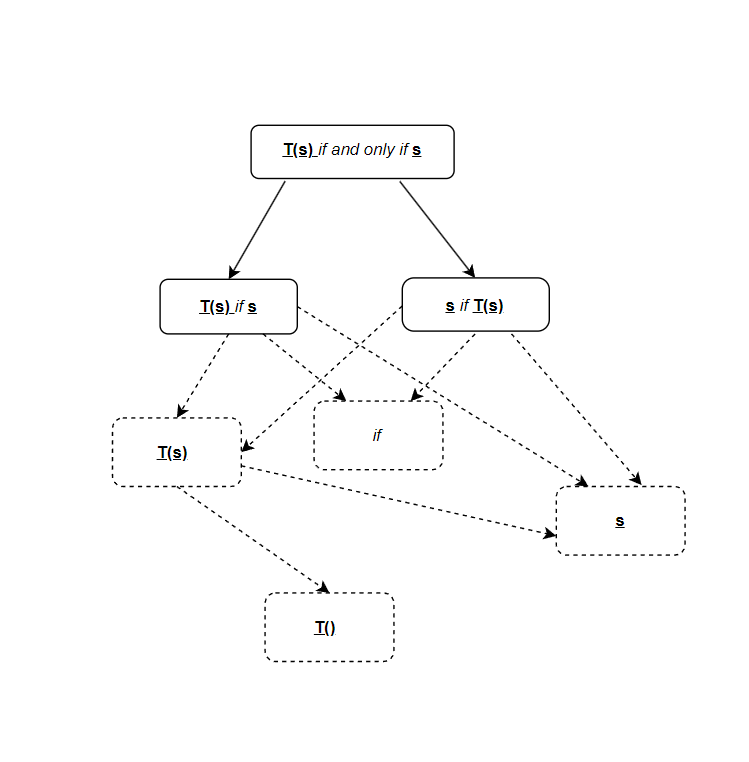

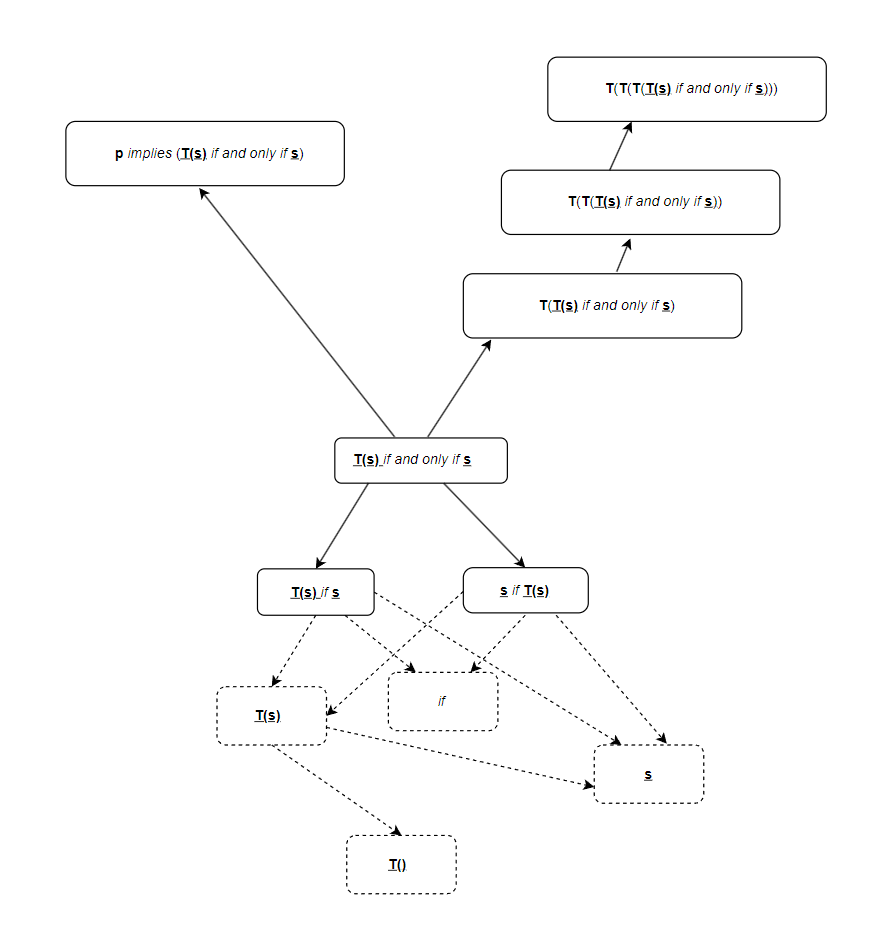

One area of related interest is the breaking apart of biconditional axiom systems (reflection as a primitive notion and, say, symmetry both come to mind). In the analysis of Logical Axiomatizations (as opposed to purely philosophical attempts to address the Liar Paradox), we observe the T-Schema being broken into two separate halves: Capture and Release.
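For reference, in the usual notation, with $T$ the truth predicate and $\ulcorner A \urcorner$ a name for the sentence $A$, the T-Schema and its two halves can be written as follows. Splitting them lets an axiomatization accept one half without the other, or accept the halves only as rules of inference, rather than committing to the full biconditional.

```latex
\begin{align*}
\text{T-Schema:} &\quad T(\ulcorner A \urcorner) \leftrightarrow A \\
\text{Capture:}  &\quad A \rightarrow T(\ulcorner A \urcorner) \\
\text{Release:}  &\quad T(\ulcorner A \urcorner) \rightarrow A
\end{align*}
```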

These obviously form basic Lattice relationships:

The metaphor also applies as we further ascend (indeed, chiastically) above the first ascent from the basic elements of logical language systems (the frothing sea of elemental language) to living-logical systems (or "creatures" if you will). The exact way these are combined gives rise to interesting mathematical properties.

For example, the iterative nature of the Truth-Predicate is chiastic. The biconditionals can also be read forward and backward once we've ascended to the first stage, etc.
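One way to make the chiastic reading concrete (an illustration, not a formal result): starting from $A$, ascend by applying Capture ($A \rightarrow T(\ulcorner A \urcorner)$) twice, then descend by applying Release ($T(\ulcorner A \urcorner) \rightarrow A$) twice. The sentences visited form a mirror-symmetric sequence, with the doubly iterated truth ascription at its center.

```latex
A
\;\xrightarrow{\text{Capture}}\; T(\ulcorner A \urcorner)
\;\xrightarrow{\text{Capture}}\; T(\ulcorner T(\ulcorner A \urcorner) \urcorner)
\;\xrightarrow{\text{Release}}\; T(\ulcorner A \urcorner)
\;\xrightarrow{\text{Release}}\; A
```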

C.S. Peirce and Semantic Immuration

I previously introduced a new theory of linguistic meaning (a deliberately crafted theory) I dubbed "Inner Semantics" and rebranded as semantic immuration. According to this view, propositions are not the meaning units of a language but contain the entire language within themselves. (It takes the conventional view and inverts it.)
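A toy sketch of the inversion, using Python dataclasses; the classes `Language` and `Proposition` and the method `unfold` are hypothetical. Conventionally a language holds its propositions; here each proposition also holds a reference to the whole language, so any single proposition suffices to recover all the others. This is only a structural analogy for semantic immuration, not an account of how the content gets in.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import List

@dataclass(eq=False)
class Language:
    # Conventional direction: a language contains its propositions.
    propositions: List[Proposition] = field(default_factory=list)

    def add(self, text: str) -> Proposition:
        p = Proposition(text, self)
        self.propositions.append(p)
        return p

@dataclass(eq=False)
class Proposition:
    text: str
    # Inverted direction: every proposition carries the whole language.
    # repr=False avoids infinite recursion when printing the cycle.
    language: Language = field(repr=False)

    def unfold(self) -> List[str]:
        # Recover every proposition of the language from this one alone.
        return [q.text for q in self.language.propositions]

toy = Language()
snow = toy.add("Snow is white.")
toy.add("Grass is green.")
print(snow.unfold())  # ['Snow is white.', 'Grass is green.']
```

Holding a reference rather than a copy means propositions added later are still "contained" in earlier ones, which is closer to the idea that each proposition contains the entire language rather than a snapshot of it.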

As it turns out, there are probably many predecessors that fall under this concept. I mentioned a couple previously. I found another that really excites me in this outstanding paper by Kauffman, "The Mathematics of Charles Sanders Peirce" (p. 106):

"In the last part of the quoted passage in the last section Peirce speaks of a hierarchy of Signs and explanations leading eventually to the Sign of itself 'containing its own explanation and those of all its significant parts.'”

While Peirce is not speaking expressly of a Proposition (or equivalent), he apparently considered the importance of background context in coordinating the meanings, uses, and conventions at play in any language system (or in resolving the meaning of a Proposition, Impliciture, Implicature, etc.). And in the particulars of the vocabulary, there is a top-level Sign which contains all elements of its own explanation.

It's not clear that any such Sign can be understood as an Expression per se, since Kauffman also emphasizes the "hiding away" of its complete depiction:

"In the case of the lambda calculus or the simple infinite nest of circles we see images of this process of enfoldment where a larger external context is kept in the background. It is important to realize the extent to which we will keep such a background hidden for our own convenience!"

Nevertheless, this is a strong additional swing in favor of the view!

Scott Topologies

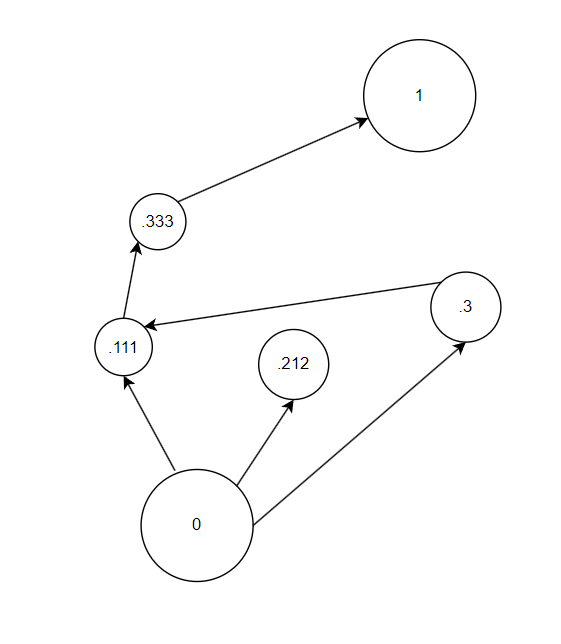

In the above paper (https://homepages.math.uic.edu/~kauffman/Peirce.pdf), Kauffman mentions the awesome Scott Topologies. A simple example would be a Lattice with 0 (or ⊥) at the bottom and 1 (or ⊤) at the top, with many continuous functions between the two (directed from 0 to 1).
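For a finite poset, every directed subset contains its own supremum, so the Scott-open sets are exactly the upper sets. A minimal sketch under that assumption (the helper `scott_open_sets` and the order test `le` are hypothetical names): for the two-element lattice 0 ≤ 1 it returns ∅, {1}, and {0, 1}, which is the Sierpiński space.

```python
from itertools import combinations
from typing import Callable, FrozenSet, Hashable, List, Sequence

def scott_open_sets(
    elements: Sequence[Hashable],
    leq: Callable[[Hashable, Hashable], bool],
) -> List[FrozenSet[Hashable]]:
    """Scott-open sets of a FINITE poset, i.e. its upper sets.

    Finite directed sets contain their own supremum, so the Scott condition
    reduces to upward closure; this shortcut does not carry over to
    infinite posets.
    """
    opens = []
    for r in range(len(elements) + 1):
        for subset in combinations(elements, r):
            s = frozenset(subset)
            # Upward closed: anything above a member is also a member.
            if all(y in s for x in s for y in elements if leq(x, y)):
                opens.append(s)
    return opens

def le(x: float, y: float) -> bool:
    return x <= y

# Two-element lattice 0 <= 1: the opens are {}, {1}, {0, 1}.
print(scott_open_sets([0, 1], le))
# A three-element chain 0 <= 0.5 <= 1 has four opens.
print(scott_open_sets([0, 0.5, 1], le))
```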

Great depictions of some cool Scott Topologies are provided here: https://arxiv.org/pdf/2103.15139.pdf and https://arxiv.org/pdf/2211.15027.pdf

These are super neat for two reasons:

- It turns out that at least some Scott Topologies are Models for (or of) a Truth-Only Logic. Some of the examples I give, whereby a Single Truth-Value is composed of several recursively contained subsets, naturally give rise to Scott Topologies. We can easily construct "Many-Valued Interpretations of Logic," but within the context of a Single Truth-Value (here each of the Truth-Values is a piece or part of a Single-Valued but Comparative Theory of Truth). As far as I know, Scott Topologies provide the underlying machinery or edifice for certain representations of Logic, Logical Relations, etc., but they don't rule decisively on the type of Truth system deployed. For instance, it isn't clear whether a Single-Value Logic requires continuous functions all the way through. Consider:

Above, suppose we have a partially represented Single Truth-Value that doesn't enforce successor ordering and which may have [omitted] discontinuous functions. There is a trivial Scott Topology exhibited by the above, but that's not necessarily the whole story.

- I find it interesting that an Expression (or some equivalent) might express not just the entirety of the formal Language definition of which it is a part but also all other Expressions within the Language. We don't have to read a continuous function on a Scott Topology as an Expression per se, but we could (perhaps as an Expression of a non-Boolean Algebra, some simpler system). Let any point P in the Scott Topological Space be a proposition. Since it's also a continuous mapping, it automatically expresses many others.

That by itself isn't so unusual in the world of finitary logical constructions, but in the realm of infinitary constructions a single proposition can do the work of infinitely many. (In infinitary extensions of Predicate Calculus this would be, say, a Proposition containing a Universal Quantifier ranging over all other Propositions within the system: a finite sequence of symbols with infinite semantic content.) This exercise gets us one step closer to unlocking purely relational semantics.
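On finite posets, a map is Scott-continuous exactly when it is monotone, which makes the question of "continuous functions all the way through" easy to test on small examples. A minimal sketch, assuming a Single Truth-Value modeled (hypothetically) as a chain of recursively nested parts ordered by inclusion; `is_scott_continuous`, `grow`, and `flip` are illustrative names, not anything from the cited papers.

```python
from itertools import product
from typing import Callable, FrozenSet, List

Part = FrozenSet[str]

def is_scott_continuous(
    elements: List[Part],
    leq: Callable[[Part, Part], bool],
    f: Callable[[Part], Part],
) -> bool:
    # For finite posets, Scott continuity coincides with monotonicity.
    return all(leq(f(x), f(y)) for x, y in product(elements, repeat=2) if leq(x, y))

def subset(x: Part, y: Part) -> bool:
    return x <= y  # inclusion order on frozensets

# Hypothetical nested parts of one truth value: {} < {a} < {a,b} < {a,b,c}.
parts: List[Part] = [frozenset(), frozenset("a"), frozenset("ab"), frozenset("abc")]

def grow(s: Part) -> Part:
    # Adds the innermost part; preserves the nesting order.
    return s | frozenset("a")

def flip(s: Part) -> Part:
    # Reverses the nesting; larger parts map to smaller ones.
    return parts[len(parts) - 1 - parts.index(s)]

print(is_scott_continuous(parts, subset, grow))  # True
print(is_scott_continuous(parts, subset, flip))  # False
```

The reversing map fails the test, so it is a simple example of a discontinuous function on this Scott Topology.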

post: 12/23/2023

update: 12/24/2023

update: 07/21/2024